In this post we describe the Nyström method for finding the eigenvalues and eigenfunctions of a kernel function. This has applications in a wide range of settings: from Gaussian process modeling to functional data analysis to kernel methods. We first describe the motivation in terms of finding the posterior mean of a Gaussian processes. Next we describe how having access to the eigenvalues and eigenfunctions of the kernel can help us with a faster approximate solution. We then frame the eigenproblem in terms of an integral equation and describe the Nyström method.

Motivation

In many problems using kernels in machine learning, the naive solution involves inverting a matrix computed by evaluating a kernel at all combinations of observed points. For instance, say you want to estimate the posterior mean of a Gaussian process, where the prior has mean ![]() and covariance function

and covariance function ![]() , where

, where ![]() and

and ![]() are two time points. If

are two time points. If ![]() are the set of

are the set of ![]() training time with observations

training time with observations ![]() and

and ![]() is the set of test time points, then the posterior mean is

is the set of test time points, then the posterior mean is

(1) ![]()

However, this involves forming the covariance matrix between all time points ![]() and inverting it, an

and inverting it, an ![]() operation. The same issue comes up in kernel ridge regression, and very similar issues come up in other kernel settings.

operation. The same issue comes up in kernel ridge regression, and very similar issues come up in other kernel settings.

A reasonable question is: can we approximate the inverse ![]() with a lower order dependence on

with a lower order dependence on ![]() ?

?

Approximating the Inverse

Mercer’s Theorem

The key idea towards approximating the inverse efficiently is approximating the kernel. To do so we recall Mercer’s theorem, which describes how we can express a continuous symmetric positive semi-definite kernel in terms of eigenvalues and eigenfunctions.

Theorem: (Mercer’s Theorem) Let ![]() be a continuous symmetric positive semi-definite kernel on the space

be a continuous symmetric positive semi-definite kernel on the space ![]() with measure

with measure ![]() . There exists an orthonormal basis

. There exists an orthonormal basis ![]() and corresponding non-negative weights

and corresponding non-negative weights ![]() such that

such that

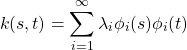

(2)

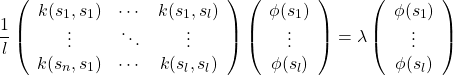

where ![]() , the eigenvalues and eigenfunctions, respectively, are the solutions to

, the eigenvalues and eigenfunctions, respectively, are the solutions to

(3) ![]()

Using Mercer’s Theorem to Approximate the Inverse

Since the ![]() in order for the series to converge, if we knew the eigenvalues and eigenfunctions we could approximate the kernel with a finite sum

in order for the series to converge, if we knew the eigenvalues and eigenfunctions we could approximate the kernel with a finite sum

(4)

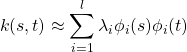

We can then express the matrix

(5)

This is a sum of rank one matrices and can be cheaply inverted using Woodbury’s matrix identity. However we do not have direct access to the eigenvalues and eigenfunctions. The Nyström method involves using an approximation to the integral in order to approximate them. We can then use the approximate eigenpairs to approximate the kernel matrix as the above sum of rank one updates, which can be cheaply inverted.

Nyström Method

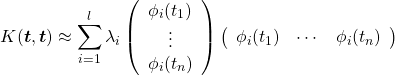

The Nyström method involves approximating ![]() by sampling

by sampling ![]() from

from ![]() and then solving

and then solving

(6)

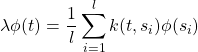

Substituting ![]() with

with ![]() gives us

gives us

(7)

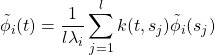

We can then find ![]() for

for ![]() , approximations to the true eigenpairs by solving for the eigenvalues and eigenvectors of the scaled kernel matrix. However, we want

, approximations to the true eigenpairs by solving for the eigenvalues and eigenvectors of the scaled kernel matrix. However, we want ![]() evaluated at arbitrary points

evaluated at arbitrary points ![]() . We obtain this via

. We obtain this via

(8)

Putting it All Together

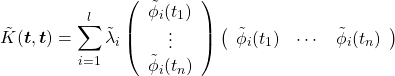

Recall that we have approximated the kernel ![]() with a finite sum, but we lacked

with a finite sum, but we lacked ![]() and

and ![]() . We now have approximations to them that we can plug in from Nyström’s method. We can then compute an approximate kernel matrix using

. We now have approximations to them that we can plug in from Nyström’s method. We can then compute an approximate kernel matrix using

(9)

Now recalling that

(10) ![]()

We can approximate

(11) ![]()

where the term ![]() is inexpensive to compute.

is inexpensive to compute.