Background

Classical statistics was developed to study how to collect and analyze data from controlled studies. However, it is often expensive, unethical, or impossible to conduct an experiment. For example, say you want to test whether cocaine dosage affects heart rate. Running a controlled experiment in which you set the cocaine dosage would be highly unethical. You can, however, collect observational data from both people already taking cocaine and those not taking it, and analyze that. This poses new challenges for data analysis, particularly when doing inference rather than prediction. Many of the techniques for analyzing observational data come from econometrics, where conducting controlled economic experiments is often infeasible.

As a modeling example of this, consider linear regression: you have a design matrix $X \in \mathbb{R}^{n \times p}$, where each row $x_i$ is a feature vector associated with a single observation, and a vector of responses $y \in \mathbb{R}^n$, and you want to model your data via linear regression

(1) $y = X\beta + \epsilon$

We want to do three things: 1) estimate $\beta$, 2) test whether $\beta_j$ is significantly different from $0$, and 3) potentially predict $y$ on new features $x$.
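As a rough illustration of these three tasks, here is a minimal NumPy sketch on simulated data. The sample size, the true coefficients, and the names `dosage`, `beta_true`, and `x_new` are assumptions made up for this example. The t-statistics use the classical OLS variance formula, which relies on the assumptions discussed in the rest of this post.

```python
import numpy as np

rng = np.random.default_rng(0)

# Simulated data: every number below is an illustrative assumption.
n = 500
dosage = rng.uniform(0.0, 10.0, size=n)
X = np.column_stack([np.ones(n), dosage])  # intercept column plus one feature
beta_true = np.array([1.0, 0.5])
y = X @ beta_true + rng.normal(0.0, 1.0, size=n)

# 1) Estimate beta by ordinary least squares.
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)

# 2) t-statistics for H0: beta_j = 0, using the classical variance estimate
#    sigma^2 (X'X)^{-1} with sigma^2 estimated from the residuals.
residuals = y - X @ beta_hat
sigma2_hat = residuals @ residuals / (n - X.shape[1])
cov_beta = sigma2_hat * np.linalg.inv(X.T @ X)
t_stats = beta_hat / np.sqrt(np.diag(cov_beta))

# 3) Predict the response at a new feature vector (intercept term, dosage = 4).
x_new = np.array([1.0, 4.0])
y_pred = x_new @ beta_hat

print("beta_hat:", beta_hat)
print("t-statistics:", t_stats)
print("prediction at dosage 4:", y_pred)
```

Here the dosages are drawn once and then treated as given, which mirrors the controlled-experiment setting described next.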

In classical statistics, you assume your $x_i$ are fixed quantities. You can think of each $x_i$ as a vector of knobs that are controlled by the person running an experiment. For instance, you might want to estimate the effect of different dosages of a medicine. In this case, $x_i$ is two-dimensional: the first dimension handles the intercept while the second is the dosage, which is controlled by the experimenter. On the other hand, if we collect a random sample of people taking cocaine, we don’t control how much cocaine they take, and thus each $x_i$ is a realization of a random variable.

How Do Assumptions Change

In the setting of fixed features, linear regression fit by ordinary least squares (OLS) traditionally makes the following assumptions. When they hold, the Gauss-Markov theorem tells us that OLS is the best linear unbiased estimator (BLUE), and we can also derive correct test statistics.

- Linearity

- Homoskedasticity and uncorrelated errors, $\mathrm{Var}(\epsilon) = \sigma^2 I$

- $X$ has full rank

- In some cases for hypothesis testing we assume $\epsilon \sim N(0, \sigma^2 I)$

However, when we move to the observational setting, the assumptions become conditional on $X$.

- Linearity

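- **Exogeneity: $E[\epsilon \mid X] = 0$**

- Homoskedasticity and uncorrelated errors, $\mathrm{Var}(\epsilon \mid X) = \sigma^2 I$

- $X$ has full rank

- In some cases we assume $\epsilon \mid X \sim N(0, \sigma^2 I)$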

The important addition is exogeneity. Intuitively, it means that we avoided some of the common issues that cause causal conclusions to be wrong (but it doesn’t necessarily mean that the causal conclusions are right!). Importantly, exogeneity is needed for our estimator to be BLUE and (usually) for consistency to hold when applying OLS, that is, for the estimates to converge in probability to the true parameters in large samples. The alternative to exogeneity is endogeneity. A great intuitive discussion of what exogeneity and endogeneity mean can be found at https://stats.stackexchange.com/questions/59588/what-do-endogeneity-and-exogeneity-mean-substantively.

Two Implications of Exogeneity

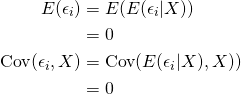

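One often sees that exogeneity implies that $E[x_i \epsilon_i] = 0$ or $\mathrm{Cov}(x_i, \epsilon_i) = 0$. To see why, note

(2) $E[x_i \epsilon_i] = E\big[E[x_i \epsilon_i \mid X]\big] = E\big[x_i \, E[\epsilon_i \mid X]\big] = 0$

thus we can look for model specifications that imply $\mathrm{Cov}(x_i, \epsilon_i) \neq 0$ to find violations of exogeneity.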
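For completeness, here is one way to write out the steps that connect exogeneity to the covariance statement. It is a sketch using the law of iterated expectations, and it assumes exogeneity takes the form $E[\epsilon_i \mid X] = 0$ with $x_i$ a row of $X$.

```latex
\begin{aligned}
E[\epsilon_i] &= E\big[E[\epsilon_i \mid X]\big] = 0, \\
E[x_i \epsilon_i] &= E\big[x_i \, E[\epsilon_i \mid X]\big] = 0, \\
\operatorname{Cov}(x_i, \epsilon_i) &= E[x_i \epsilon_i] - E[x_i]\, E[\epsilon_i] = 0.
\end{aligned}
```

The converse does not hold in general: zero covariance only rules out a linear relationship between the features and the errors, while exogeneity is a statement about the whole conditional mean.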

When Does This Matter?

Inference

When doing inference, this is a key issue. As mentioned above, in the presence of endogeneity one often finds that ordinary least squares is both biased and inconsistent. Not only is our estimator biased, but we also can’t use it for hypothesis testing, because the test statistics will be wrong even in large samples. It is important to address this issue for inference purposes.

What Leads to Endogeneity?

There are a variety of causes of endogeneity, but two of the most common are omitted variables and measurement errors.

Omitted Variables

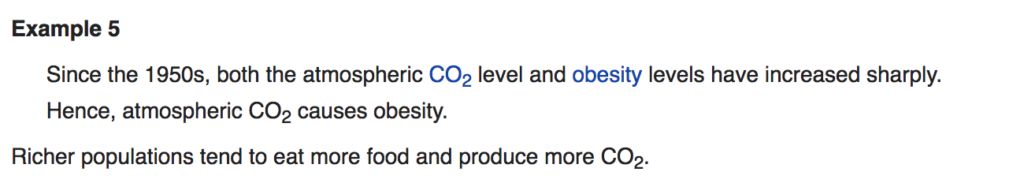

You’ve probably heard the phrase ‘correlation does not imply causation.’ Omitted variables are a key part of that statement. Wikipedia’s article on the subject gives the following example:

If we regress obesity on CO2 levels, we’ll likely get a positive coefficient (there are issues with the temporal structure but let’s ignore those for now). However, if we include the wealth of the population as a feature, the effect is likely to disappear. Thus the omitted variable likely leads to overestimation of the effect we originally estimated.

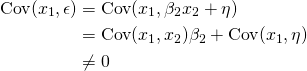

Let’s look at this mathematically (the example is from Coursera’s econometrics course). Say we have two features $x_1$ and $x_2$ and the true model is

(3) $y = \beta_1 x_1 + \beta_2 x_2 + \epsilon$

Now assume we ignore $x_2$ and regress $y = \beta_1 x_1 + u$, where $u = \beta_2 x_2 + \epsilon$. Then if $x_2$ is correlated with $x_1$ and $\beta_2 \neq 0$, we have that

(4) $\mathrm{Cov}(x_1, u) = \beta_2 \, \mathrm{Cov}(x_1, x_2) + \mathrm{Cov}(x_1, \epsilon) = \beta_2 \, \mathrm{Cov}(x_1, x_2) \neq 0$

and thus we have endogeneity. Note that in this case, endogeneity is actually caused by model misspecification.
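A small simulation can make the omitted-variable effect concrete. This is a sketch under assumed values: the coefficients, the 0.5 dependence of `x2` on `x1`, and all variable names are hypothetical choices for illustration. With these choices, the regression that omits `x2` should land near $\beta_1 + \beta_2 \, \mathrm{Cov}(x_1, x_2) / \mathrm{Var}(x_1)$ rather than near $\beta_1$.

```python
import numpy as np

rng = np.random.default_rng(1)

# Assumed true model: y = beta1 * x1 + beta2 * x2 + eps (no intercept,
# all variables mean zero). These values are illustrative only.
n = 100_000
beta1, beta2 = 1.0, 2.0

x1 = rng.normal(size=n)
x2 = 0.5 * x1 + rng.normal(size=n)  # x2 is correlated with x1: Cov(x1, x2) = 0.5
eps = rng.normal(size=n)
y = beta1 * x1 + beta2 * x2 + eps

# Correctly specified regression on both features.
X_full = np.column_stack([x1, x2])
b_full, *_ = np.linalg.lstsq(X_full, y, rcond=None)

# Misspecified regression that omits x2.
b_short, *_ = np.linalg.lstsq(x1[:, None], y, rcond=None)

# Large-sample value of the short-regression coefficient:
# beta1 + beta2 * Cov(x1, x2) / Var(x1), with Var(x1) = 1 here.
expected_short = beta1 + beta2 * 0.5

print("full regression:", b_full)
print("short regression:", b_short[0], "expected about", expected_short)
```

Increasing `n` does not help the short regression: it converges, but to the wrong value.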

Measurement Error

Another cause of endogeneity is measurement error. Let’s say that $\beta$ is the treatment effect but we can’t accurately measure the dosage $x$, and instead observe $\tilde{x} = x + \eta$, where $\eta$ is some mean-zero random variable, and we fit $y = \beta \tilde{x} + u$. If the true model is $y = \beta x + \epsilon$, then $u = \epsilon - \beta \eta$, which is correlated with $\tilde{x}$ through $\eta$. In this case we have endogeneity and OLS loses its consistency properties.
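The effect of measurement error can also be checked by simulation. The sketch below assumes classical measurement error, meaning the noise `eta` is independent of both the true dosage and the regression error. All numbers and names are made up for illustration. Under that assumption the OLS slope on the noisy feature shrinks toward zero by the factor $\mathrm{Var}(x) / (\mathrm{Var}(x) + \mathrm{Var}(\eta))$.

```python
import numpy as np

rng = np.random.default_rng(2)

# Assumed setup: true model y = beta * x + eps, but we only observe x + eta.
n = 100_000
beta = 2.0

x = rng.normal(0.0, 1.0, size=n)    # true dosage, variance 1
eta = rng.normal(0.0, 1.0, size=n)  # measurement noise, variance 1
eps = rng.normal(0.0, 1.0, size=n)
y = beta * x + eps
x_obs = x + eta                     # what we actually get to measure

# No-intercept OLS slopes (all variables are mean zero by construction).
b_true = (x @ y) / (x @ x)              # regression on the true dosage
b_obs = (x_obs @ y) / (x_obs @ x_obs)   # regression on the noisy dosage

# Large-sample attenuation factor: Var(x) / (Var(x) + Var(eta)) = 0.5 here.
attenuation = 1.0 / (1.0 + 1.0)

print("slope with true x:", b_true)
print("slope with noisy x:", b_obs, "expected about", beta * attenuation)
```

With equal variances for the signal and the noise, the estimated effect is roughly half the true effect no matter how large the sample is.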

How Do We Deal With This?

If we wish to recover a consistent estimate of the parameters $\beta$, then we need to study instrumental variables. We will discuss these in a future post.