These are solutions to the intuition questions from Stanford’s Convolutional Neural Networks for Visual Recognition (Stanford CS 231n) assignment 1 inline problems for KNN.

Inline Question #1:

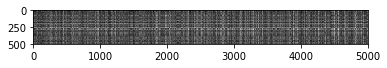

Notice the structured patterns in the distance matrix, where some rows or columns are visibly brighter. (Note that with the default color scheme black indicates low distances while white indicates high distances.)

- What in the data is the cause behind the distinctly bright rows?

- What causes the columns?

Your Answer:

- A bright row corresponds to a test point that is far from all or most training points. Either it is an observation from a class not in the training data set, or it is at least very different from most of the training data, probably in terms of background color.

- A bright column corresponds to a training point that doesn’t have any similar points in the test set.
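To make the row and column reading concrete, here is a minimal sketch on made-up data. The toy "images", the `l2` helper, and the convention that `dists[i][j]` holds the distance from test point `i` to training point `j` are assumptions for illustration, not the assignment's actual data or code.

```python
# Hypothetical toy data: each "image" is a short list of pixel intensities.
train = [[10, 12, 11], [11, 13, 12], [200, 210, 205]]  # last training point is unusual
test = [[10, 11, 12], [12, 12, 11], [250, 5, 250]]     # last test point is unusual


def l2(a, b):
    """Euclidean distance between two equal-length vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5


# dists[i][j] = distance from test point i to training point j (assumed layout).
dists = [[l2(t, tr) for tr in train] for t in test]

# Average brightness of each row (test point) and each column (training point).
row_means = [sum(row) / len(row) for row in dists]
col_means = [
    sum(dists[i][j] for i in range(len(test))) / len(test)
    for j in range(len(train))
]

brightest_row = row_means.index(max(row_means))
brightest_col = col_means.index(max(col_means))
print("brightest row (test index):", brightest_row)
print("brightest column (train index):", brightest_col)
```

In this sketch the unusual test point produces a row of large distances, and the unusual training point produces a column of large distances.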

Inline Question 2

We can also use other distance metrics such as L1 distance.

The performance of a Nearest Neighbor classifier that uses L1 distance will not change if (Select all that apply.):

- The data is preprocessed by subtracting the mean.

- The data is preprocessed by subtracting the mean and dividing by the standard deviation.

- The coordinate axes for the data are rotated.

- None of the above.

Your Answer:

1., 2.

Your explanation:

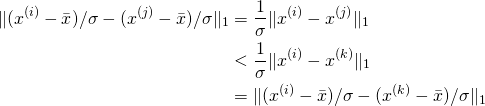

- Consider

(1)

Then distances are preserved under subtraction of the mean. - Assume

(2)

then(3)

and thus ordering of distances is preserved under subtraction of mean and dividing by standard deviation. - Does not hold. Consider three points

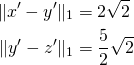

. Then

. Then(4)

so that and

and  both have the same distance from

both have the same distance from  . Now consider the

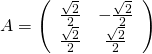

. Now consider the  degree rotation matrix

degree rotation matrix(5)

Then(6)

so that(7)

and thus . l1 distance ordering is then not preserved under rotation.

. l1 distance ordering is then not preserved under rotation.
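The three cases above can be checked numerically. The sketch below uses hypothetical points `x`, `y`, `z`, a hypothetical scalar mean `mu` and standard deviation `sigma`, and a 45 degree rotation; none of these values are taken from the original figures, they are chosen only so that the effect is easy to see.

```python
import math


def l1(a, b):
    """L1 (Manhattan) distance between two equal-length points."""
    return sum(abs(p - q) for p, q in zip(a, b))


def rotate(p, degrees):
    """Rotate a 2D point counterclockwise about the origin."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return (c * p[0] - s * p[1], s * p[0] + c * p[1])


# Hypothetical points: y is nearer to x than z is under L1.
x, y, z = (0.0, 0.0), (1.0, 0.0), (0.6, 0.6)
mu, sigma = 3.0, 2.0  # hypothetical scalar mean and standard deviation


def shift(p):
    """Subtract the scalar mean from every coordinate."""
    return tuple(v - mu for v in p)


def standardize(p):
    """Subtract the scalar mean, then divide by the scalar std."""
    return tuple((v - mu) / sigma for v in p)


d_xy, d_xz = l1(x, y), l1(x, z)

# Case 1: mean subtraction leaves every distance unchanged.
shifted = (l1(shift(x), shift(y)), l1(shift(x), shift(z)))

# Case 2: standardizing with a scalar sigma rescales all distances equally.
scaled = (l1(standardize(x), standardize(y)), l1(standardize(x), standardize(z)))

# Case 3: rotation changes L1 distances unevenly, so the order can flip.
rotated = (l1(rotate(x, 45), rotate(y, 45)), l1(rotate(x, 45), rotate(z, 45)))

print("original:", d_xy, d_xz)
print("shifted: ", shifted)
print("scaled:  ", scaled)
print("rotated: ", rotated)
```

With these particular points, `y` is the nearest neighbor of `x` before rotation and `z` is the nearest neighbor afterwards, which is enough to change a 1-NN prediction.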

Inline Question 3

Which of the following statements about $k$-Nearest Neighbor ($k$-NN) are true in a classification setting, and for all $k$? Select all that apply.

- The training error of a 1-NN will always be better than that of 5-NN.

- The test error of a 1-NN will always be better than that of a 5-NN.

- The decision boundary of the k-NN classifier is linear.

- The time needed to classify a test example with the k-NN classifier grows with the size of the training set.

- None of the above.

Your Answer:

1., 4.

Your explanation:

- This is true. If you use the training data set as the test set, then with one nearest neighbor, the nearest neighbor of a point x is x itself, and thus the training error will be $0$. For 5-NN, $0$ is only a lower bound, so its training error can never be lower than that of 1-NN.

- False. Consider a 1D example in which the single training point nearest to a new test point has a different label from the test point, while the majority of its five nearest training points share its label. Then this point will have test error $1$ for 1-NN and $0$ for $k = 5$.

- No. Consider two classes, one in the shape of a moon and the other surrounding the moon. Then the decision boundary will have approximately the shape of a moon.

- This is true. At test time, KNN needs to make a full pass through the entire training set and sort points by distance. The time needed thus grows with the size of the training set.
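The first two points can be illustrated with a small 1D sketch. The training set, the test point, and the helpers `knn_predict` and `error_rate` are hypothetical and written only for this illustration; they are not the assignment's classifier.

```python
from collections import Counter


def knn_predict(train, query, k):
    """Return the majority label among the k training points nearest to query.

    train is a list of (x, label) pairs. Every call scans and sorts the whole
    training set, so the cost of one prediction grows with len(train).
    """
    neighbors = sorted(train, key=lambda pt: abs(pt[0] - query))[:k]
    return Counter(label for _, label in neighbors).most_common(1)[0][0]


def error_rate(train, points, k):
    """Fraction of (x, label) pairs in points that k-NN misclassifies."""
    wrong = sum(1 for x, label in points if knn_predict(train, x, k) != label)
    return wrong / len(points)


# Hypothetical 1D training set: a lone "b" sits in the middle of the "a" points.
train = [
    (0.0, "a"), (1.0, "a"), (2.0, "b"), (3.0, "a"), (4.0, "a"),
    (10.0, "b"), (11.0, "b"),
]

# Hypothetical test point whose true label is "a" but whose nearest
# training point is the lone "b" at x = 2.0.
test = [(2.1, "a")]

train_err_1 = error_rate(train, train, k=1)  # each point is its own neighbor
train_err_5 = error_rate(train, train, k=5)  # the lone "b" is outvoted
test_err_1 = error_rate(train, test, k=1)
test_err_5 = error_rate(train, test, k=5)

print("training error:", train_err_1, train_err_5)
print("test error:    ", test_err_1, test_err_5)
```

On this data 1-NN has zero training error while 5-NN misclassifies the lone "b", and on the single test point the roles reverse: 1-NN is wrong and 5-NN is right.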