I’d often seen two different versions of the Cauchy-Schwarz inequality (CS). In analysis and linear algebra I’d learned that if $u$ and $v$ are elements of a vector space $V$ equipped with an inner product $\langle \cdot, \cdot \rangle$, we have

$$|\langle u, v \rangle|^2 \le \langle u, u \rangle \, \langle v, v \rangle \tag{1}$$
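As a quick numerical sanity check of the vector-space version, here is a small sketch that assumes the concrete case $V = \mathbb{R}^n$ with the ordinary dot product (the helper names `dot` and `cauchy_schwarz_holds` are hypothetical, chosen for illustration). It tests the inequality on random vectors and shows that equality holds when one vector is a scalar multiple of the other.

```python
import random

def dot(u, v):
    """Standard inner product on R^n (assumed example of <., .>)."""
    return sum(a * b for a, b in zip(u, v))

def cauchy_schwarz_holds(u, v, tol=1e-12):
    """Check |<u, v>|^2 <= <u, u><v, v>, allowing for floating-point rounding."""
    lhs = dot(u, v) ** 2
    rhs = dot(u, u) * dot(v, v)
    return lhs <= rhs + tol * max(1.0, rhs)

random.seed(0)
for _ in range(1000):
    n = random.randint(1, 10)
    u = [random.gauss(0, 1) for _ in range(n)]
    v = [random.gauss(0, 1) for _ in range(n)]
    assert cauchy_schwarz_holds(u, v)

# Equality case: v is a scalar multiple of u.
u = [1.0, 2.0, 3.0]
v = [2.0, 4.0, 6.0]
print(dot(u, v) ** 2, dot(u, u) * dot(v, v))  # 784.0 784.0
```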

while in probability and statistics I’d learned that for random variables ![]() we have

we have

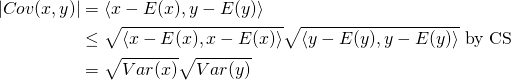

(2) ![]()

I’d seen proofs of both, but never in a way that tied them together. Today I learned how they’re related, and it’s super simple. Define $\langle X, Y \rangle = E[XY]$. One can verify that this satisfies the definition of an inner product on square-integrable random variables (identifying variables that are equal almost surely, so that $\langle X, X \rangle = 0$ forces $X = 0$). Then we have

(3)

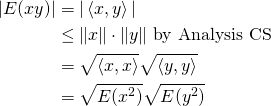
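To see the inner-product view in action, the sketch below assumes the inner product is $\langle X, Y \rangle = E[XY]$ and replaces the expectation with a sample average over paired draws (the expectation under the empirical distribution). That average is just a scaled dot product, so it is itself an inner product and the inequality holds exactly for the samples, not merely in the limit. The function name `inner` and the chosen distributions are illustrative assumptions.

```python
import math
import random

def inner(xs, ys):
    """Empirical stand-in for <X, Y> = E[XY]: the average of x_i * y_i."""
    return sum(x * y for x, y in zip(xs, ys)) / len(xs)

random.seed(1)
n = 10_000
xs = [random.gauss(0, 1) for _ in range(n)]
# Y depends on X plus independent noise, so it is not a multiple of X.
ys = [0.5 * x + random.gauss(1, 1) for x in xs]
zs = [random.uniform(-2, 2) for _ in range(n)]

# Inner-product axioms, checked on these samples (linearity up to rounding).
assert inner(xs, ys) == inner(ys, xs)        # symmetry
assert inner(xs, xs) > 0                     # positivity
combo = [2 * x + y for x, y in zip(xs, ys)]
assert math.isclose(inner(combo, zs), 2 * inner(xs, zs) + inner(ys, zs),
                    rel_tol=1e-9, abs_tol=1e-9)  # linearity in the first slot

# The CS inequality with expectations replaced by sample averages.
lhs = inner(xs, ys) ** 2
rhs = inner(xs, xs) * inner(ys, ys)
print(lhs <= rhs)  # True
```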

It seems so obvious in hindsight, but I’d never realized it before. Further, the statement

$$\operatorname{Cov}(X, Y)^2 \le \operatorname{Var}(X) \, \operatorname{Var}(Y) \tag{4}$$

becomes really easy to prove using the inner product defined above.

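Apply CS to the centered variables $\tilde X = X - E[X]$ and $\tilde Y = Y - E[Y]$:

$$\operatorname{Cov}(X, Y)^2 = \langle \tilde X, \tilde Y \rangle^2 \le \langle \tilde X, \tilde X \rangle \, \langle \tilde Y, \tilde Y \rangle = \operatorname{Var}(X) \, \operatorname{Var}(Y) \tag{5}$$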
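The centering trick can be sketched the same way. This assumes the empirical inner product $\langle X, Y \rangle \approx \frac{1}{n}\sum_i x_i y_i$ and the population-style ($1/n$) covariance, so that covariance is literally the inner product of centered samples and the resulting correlation must land in $[-1, 1]$. The helpers `inner`, `center` and `cov` and the sample distributions are hypothetical choices for illustration.

```python
import random

def inner(xs, ys):
    """Empirical stand-in for <X, Y> = E[XY]."""
    return sum(x * y for x, y in zip(xs, ys)) / len(xs)

def center(xs):
    """Return the samples of X - E[X], using the sample mean for E[X]."""
    m = sum(xs) / len(xs)
    return [x - m for x in xs]

def cov(xs, ys):
    """Cov(X, Y) as the inner product of the centered variables."""
    return inner(center(xs), center(ys))

random.seed(2)
xs = [random.uniform(-1, 3) for _ in range(5_000)]
ys = [x ** 2 + random.gauss(0, 0.5) for x in xs]

# Var(X) = <X - E[X], X - E[X]>, so CS bounds the correlation by 1 in magnitude.
corr = cov(xs, ys) / (cov(xs, xs) * cov(ys, ys)) ** 0.5
print(-1 <= corr <= 1)  # True
```

Because `cov` is built from `inner`, the bound on `corr` is exactly the inner-product CS inequality applied to centered samples, with no separate statistical argument.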